In one of my previous posts, I discussed Custom Robots Header Tags for Blogger. If you have read that post, I hope you are aware of their importance in search rankings. Today I am back with another very useful, must-know blogging term: robots.txt. In Blogger it is known as Custom Robots.txt, which means you can now customize this file according to your needs. In today's tutorial we will discuss this term in depth and learn about its uses and benefits. I will also show you how to add a custom robots.txt file in Blogger. So let's start the tutorial.

WHAT IS ROBOTS.TXT?

Robots.txt is a text file that contains a few lines of simple code. It is saved on the website or blog's server and instructs web crawlers how to crawl and index your blog for the search results. That means you can restrict any page on your blog from web crawlers so that it does not get indexed in search engines, such as your blog's label pages, your demo page, or any other pages that are not important enough to be indexed. Always remember that search crawlers read the robots.txt file before crawling any page.

Each blog hosted on Blogger has a default robots.txt file that looks something like this:

User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED

EXPLANATION

This code is divided into three sections. Let's first study each of them; after that we will learn how to add a custom robots.txt file in Blogspot blogs.

• User-agent: Mediapartners-Google

This code is for the Google AdSense robots and helps them serve better ads on your blog. Whether or not you are using Google AdSense on your blog, simply leave it as it is.

• User-agent: *

This applies to all robots, which is what the asterisk (*) stands for. In the default settings, our blog's label links are restricted from being indexed by search crawlers, which means web crawlers will not index our label page links because of the code below.

Disallow: /search

That means links whose path starts with /search right after the domain name will be ignored. See the example below, which is the link of a label page named SEO.

http://www.learn-tipandtrick.blogspot.com/search/label/SEO

And if we remove Disallow: /search from the above code, then crawlers can access our entire blog to crawl and index all of its content and web pages.

Here Allow: / matches every URL on the blog, starting with the homepage, which means web crawlers can crawl and index the homepage and any other page that is not blocked by a Disallow rule.
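To see how these rules play out, here is a minimal sketch that feeds the default file to Python's standard-library robots.txt parser and asks which URLs a crawler may fetch. The blog address and the two test URLs are placeholders, and keep in mind that Python's parser applies the first matching rule while some search engines pick the most specific one; for this particular file both approaches should give the same answers.

```python
from urllib.robotparser import RobotFileParser

# Blogger's default rules as quoted in this post.
# example.blogspot.com is a placeholder address.
DEFAULT_ROBOTS = """\
User-agent: Mediapartners-Google
Disallow:

User-agent: *
Disallow: /search
Allow: /

Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED
"""


def build_parser(robots_text):
    """Parse robots.txt text locally, without any network request."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(DEFAULT_ROBOTS)
BLOG = "http://example.blogspot.com"

# Hypothetical URLs, used only to illustrate the rules.
label_page = BLOG + "/search/label/SEO"
post_page = BLOG + "/2013/03/post-url.html"

print(parser.can_fetch("*", label_page))                     # False: blocked by Disallow: /search
print(parser.can_fetch("*", post_page))                      # True: covered by Allow: /
print(parser.can_fetch("Mediapartners-Google", label_page))  # True: empty Disallow blocks nothing
```

The same build_parser helper can be pointed at your own custom rules before you paste them into Blogger, so you can check that a rule blocks only what you intended.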

Disallow specific Post

Now suppose we want to exclude a particular post from indexing; then we can add the lines below to the code.

Disallow: /yyyy/mm/post-url.html

Here yyyy and mm refer to the publishing year and month of the post respectively. For example, if we published a post in March 2013, then we have to use the format below.

Disallow: /2013/03/post-url.html

To make this task easy, you can simply copy the post URL and remove the blog address from the beginning.
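If you have several URLs to exclude, the copy-and-trim step can be sketched in a few lines of Python. The function name disallow_rule and the example URL are my own placeholders, not anything Blogger provides; the sketch simply keeps the path part of the URL and puts Disallow: in front of it.

```python
from urllib.parse import urlparse


def disallow_rule(url):
    """Return a Disallow line for a full post or page URL (hypothetical helper)."""
    path = urlparse(url).path  # drops the scheme and the blog address
    if not path.startswith("/"):
        raise ValueError(f"expected a full URL with a path, got: {url!r}")
    return f"Disallow: {path}"


print(disallow_rule("http://example.blogspot.com/2013/03/post-url.html"))
# Disallow: /2013/03/post-url.html
```

Because only the path is kept, the same helper should work for any URL on the blog, as long as you pass the complete address including http://.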

Disallow specific Page

If we need to disallow a particular page, then we can use the same method as above. Simply copy the page URL and remove the blog address from it, which will look something like this:

Disallow: /p/page-url.html

• Sitemap: http://example.blogspot.com/feeds/posts/default?orderby=UPDATED

This code refers to the sitemap of our blog. By adding the sitemap link here we are simply optimizing our blog's crawling rate. That means whenever web crawlers scan our robots.txt file, they will find a path to our sitemap, where all the links of our published posts are present. Web crawlers will find it easy to crawl all of our posts. Hence, there are better chances that web crawlers crawl all of our blog posts without ignoring a single one.

Note: This sitemap will only tell web crawlers about the 25 most recent posts. If you want to increase the number of links in your sitemap, then replace the default sitemap with the one below. It will work for the first 500 recent posts.

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

If you have more than 500 published posts on your blog, then you can use two sitemaps like below:

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=1&max-results=500

Sitemap: http://example.blogspot.com/atom.xml?redirect=false&start-index=501&max-results=500
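For blogs with many posts, the sitemap lines can be generated instead of typed by hand. The sketch below is only an illustration: sitemap_lines is a made-up helper, and it assumes that start-index counts from 1 and that each feed URL should cover a block of at most 500 posts, so the blocks begin at 1, 501, 1001 and so on.

```python
def sitemap_lines(blog_url, total_posts, page_size=500):
    """Build one Sitemap line per block of page_size posts (hypothetical helper).

    Assumes start-index is 1-based and each block holds at most page_size posts.
    """
    lines = []
    for start in range(1, total_posts + 1, page_size):
        lines.append(
            f"Sitemap: {blog_url}/atom.xml?redirect=false"
            f"&start-index={start}&max-results={page_size}"
        )
    return lines


# example.blogspot.com and the post count of 1200 are placeholders.
for line in sitemap_lines("http://example.blogspot.com", 1200):
    print(line)
```

With 1200 posts this prints three lines, one for each block of 500, and the last block simply contains fewer posts.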

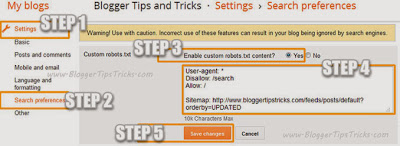

ADDING CUSTOM ROBOTS.TXT TO BLOGGER

Now the main part of this tutorial is how to add a custom robots.txt file in Blogger. Below are the steps to add it.

1. Go to your Blogger blog.

2. Navigate to Settings ›› Search Preferences ›› Crawlers and indexing ›› Custom robots.txt ›› Edit ›› Yes

3. Now paste your robots.txt file code in the box.

4. Click on the Save Changes button.

5. You are done!

HOW TO CHECK YOUR ROBOTS.TXT FILE?

You can check this file on your blog by adding /robots.txt to the end of your blog URL in the browser. Take a look at the example below for a demo.

http://learn-tipandtrick.blogspot.com/robots.txt

Once you visit the robots.txt file URL, you will see the entire code that you are using in your custom robots.txt file. See the image below.
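If you prefer to check the file from a script rather than the browser, something like the following sketch should work. The helper names robots_url and fetch_robots are my own, and the download is left commented out because it needs network access and a live blog address.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_url(blog_url):
    """Build the robots.txt address for a blog (hypothetical helper)."""
    return urljoin(blog_url, "/robots.txt")


def fetch_robots(blog_url, timeout=10):
    """Download the robots.txt file and return it as text (needs network access)."""
    with urlopen(robots_url(blog_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


print(robots_url("http://learn-tipandtrick.blogspot.com"))
# http://learn-tipandtrick.blogspot.com/robots.txt

# To print the live file, uncomment the next line:
# print(fetch_robots("http://learn-tipandtrick.blogspot.com"))
```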


FINAL WORDS!

This was today's complete tutorial on how to add a custom robots.txt file in Blogger. I really tried with all my heart to make this tutorial as simple and informative as possible. But if you still have any doubt or question, then feel free to ask me. Don't put any code in your custom robots.txt settings without knowing about it. Simply ask me to resolve your queries and I'll explain everything in detail. Thanks, guys, for reading this tutorial. If you liked it, then please support me by sharing this post on your social media profiles. Happy Blogging!
